can handle concurrent conversations with enterprise-grade reliability. They have the following features:

- Durable and atomic event handling: -backed event claiming ensures exactly-once processing, even under high concurrency and failure conditions

- Bounded concurrency: fixed worker pools prevent resource exhaustion while maintaining predictable performance under load

- Immediate event processing: events are processed within milliseconds of arrival rather than waiting for polling cycles, giving real-time responsiveness

- Resilient retry logic: automatic retries with visibility thresholds, plus cleanup of stuck or expired events

- Horizontal scalability: run multiple instances simultaneously with coordinated work distribution across all instances

- AI-powered responses: use the AI model of your choice, and optionally integrate with MCP servers

- Extensible architecture: zero-code integration for basic agents. For more specialized use cases, customize your agent using Jinja templates

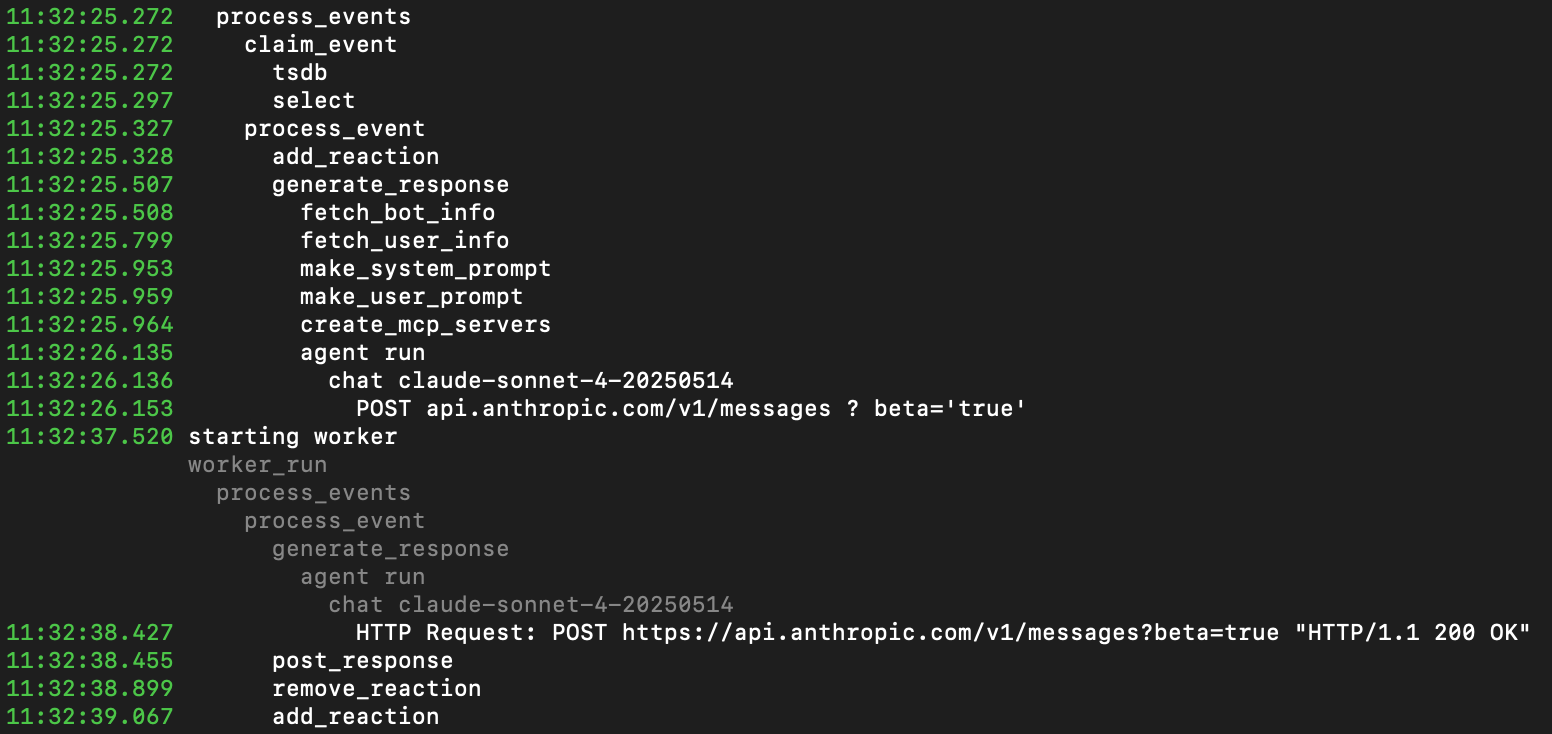

- Complete observability: detailed tracing of event flow, worker activity, and database operations with full Logfire instrumentation
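The event-handling features above can be illustrated with a short Python sketch. It is not the library's implementation: it uses SQLite from the standard library as a stand-in for the real database, and the `events` table, its columns, and the 30-second visibility timeout are hypothetical. Each claim runs inside a `BEGIN IMMEDIATE` transaction, so two workers cannot take the same event at once; a fixed `ThreadPoolExecutor` bounds concurrency; and an event whose worker never completes it becomes claimable again once its visibility timeout passes, until `MAX_ATTEMPTS` is reached.

```python
import sqlite3
import time
from concurrent.futures import ThreadPoolExecutor

# Hypothetical values for illustration. Only MAX_ATTEMPTS = 3 mirrors the
# documented --max-attempts default.
VISIBILITY_TIMEOUT = 30.0  # seconds a claimed event stays hidden from other workers
MAX_ATTEMPTS = 3


def connect(path):
    # isolation_level=None: autocommit mode, so transactions are explicit.
    return sqlite3.connect(path, timeout=10, isolation_level=None)


def init_db(path):
    conn = connect(path)
    try:
        conn.execute(
            "CREATE TABLE IF NOT EXISTS events ("
            " id INTEGER PRIMARY KEY,"
            " payload TEXT NOT NULL,"
            " attempts INTEGER NOT NULL DEFAULT 0,"
            " visible_at REAL NOT NULL DEFAULT 0,"
            " done INTEGER NOT NULL DEFAULT 0)"
        )
    finally:
        conn.close()


def enqueue(path, payload):
    conn = connect(path)
    try:
        conn.execute("INSERT INTO events (payload) VALUES (?)", (payload,))
    finally:
        conn.close()


def claim_event(path, now=None):
    """Atomically claim one visible, unfinished event; return (id, payload) or None."""
    now = time.time() if now is None else now
    conn = connect(path)
    try:
        conn.execute("BEGIN IMMEDIATE")  # take the write lock before reading
        row = conn.execute(
            "SELECT id, payload FROM events"
            " WHERE done = 0 AND attempts < ? AND visible_at <= ?"
            " ORDER BY id LIMIT 1",
            (MAX_ATTEMPTS, now),
        ).fetchone()
        if row is not None:
            # Count the attempt and hide the event until the timeout expires.
            conn.execute(
                "UPDATE events SET attempts = attempts + 1, visible_at = ? WHERE id = ?",
                (now + VISIBILITY_TIMEOUT, row[0]),
            )
        conn.execute("COMMIT")
        return row
    finally:
        conn.close()  # closing without COMMIT rolls the transaction back


def complete_event(path, event_id):
    conn = connect(path)
    try:
        conn.execute("BEGIN IMMEDIATE")  # same lock order as claim_event
        conn.execute("UPDATE events SET done = 1 WHERE id = ?", (event_id,))
        conn.execute("COMMIT")
    finally:
        conn.close()


def worker(path, handled):
    # Keep claiming until no visible work is left.
    while (event := claim_event(path)) is not None:
        handled.append(event[0])  # stand-in for calling the AI model
        complete_event(path, event[0])


def run(path, num_workers=5):
    """Drain the queue with a fixed pool of num_workers threads."""
    handled = []  # list.append is safe to call from several CPython threads
    with ThreadPoolExecutor(max_workers=num_workers) as pool:
        futures = [pool.submit(worker, path, handled) for _ in range(num_workers)]
        for future in futures:
            future.result()  # re-raise any worker exception
    return handled
```

Because claims are coordinated through the database rather than in process memory, several copies of this program pointed at the same database file would share the work the same way, which is one way to get the coordinated work distribution the feature list describes.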

Prerequisites

To follow the procedure on this page you need to:

- Create a target . This procedure also works for .

- Install the uv package manager

- Get an Anthropic API key

- Optional: get a Logfire token

Create a Slack app

Before installing , you need to create a Slack app that the will connect to. This app provides the security tokens for Slack integration with your :

Create a manifest for your Slack App

- In a temporary directory, download the Slack manifest template:

- Edit `slack-manifest.json` and customize the name and description of your Slack App. For example:

- Copy the contents of `slack-manifest.json` to the clipboard:
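If you prefer to script the manifest edit instead of using a text editor, the following minimal sketch does it in Python. It assumes the downloaded template is JSON and that, as in Slack's app manifest format, the app name and description live under `display_information`; the helper `customize_manifest` and the example values are hypothetical. The 35- and 140-character limits follow Slack's manifest reference.

```python
import json
from pathlib import Path

# Length limits for display_information fields in Slack app manifests.
MAX_NAME_LEN = 35
MAX_DESCRIPTION_LEN = 140


def customize_manifest(path, name, description):
    """Set display_information.name and .description in a JSON Slack app manifest."""
    if not name or len(name) > MAX_NAME_LEN:
        raise ValueError(f"name must be 1-{MAX_NAME_LEN} characters")
    if len(description) > MAX_DESCRIPTION_LEN:
        raise ValueError(f"description must be at most {MAX_DESCRIPTION_LEN} characters")
    manifest_path = Path(path)
    manifest = json.loads(manifest_path.read_text(encoding="utf-8"))
    info = manifest.setdefault("display_information", {})
    info["name"] = name
    info["description"] = description
    # Rewrite the file in place; every other manifest setting is left unchanged.
    manifest_path.write_text(json.dumps(manifest, indent=2) + "\n", encoding="utf-8")
    return manifest
```

For example, `customize_manifest("slack-manifest.json", "Data Helper", "Answers questions about our analytics")` updates the file you then copy to the clipboard.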

Create the Slack app

- Go to api.slack.com/apps.

- Click Create New App.

- Select From a manifest.

- Choose your workspace, then click Next.

- Paste the contents of `slack-manifest.json` and click Next.

- Click Create.

Generate an app-level token

- In your app settings, go to Basic Information.

- Scroll to App-Level Tokens.

- Click Generate Token and Scopes.

- Add a Token Name, then click Add Scope, add `connections:write`, then click Generate.

- Copy the `xapp-*` token locally and click Done.

Install your app to a Slack workspace

- In the sidebar, under Settings, click Install App.

- Click Install to `<workspace name>`, then click Allow.

- Copy the `xoxb-` Bot User OAuth Token locally.
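A common setup mistake is swapping the two tokens or copying one with stray whitespace. The minimal sketch below checks only the prefixes named above (`xapp-` for the app-level token, `xoxb-` for the Bot User OAuth Token); it does not contact Slack, so a token that passes can still be revoked or wrong. The function name is hypothetical.

```python
def check_slack_tokens(app_token, bot_token):
    """Return a list of format problems; an empty list means the tokens look plausible."""
    problems = []
    for label, token, prefix in (
        ("app-level token", app_token, "xapp-"),
        ("bot token", bot_token, "xoxb-"),
    ):
        # Copy-paste often picks up a trailing newline or space.
        if token != token.strip():
            problems.append(f"{label} has leading or trailing whitespace")
        if not token.strip().startswith(prefix):
            problems.append(f"{label} should start with {prefix!r}")
    return problems
```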

Install and configure your instance

are a production-ready library and CLI written in Python that you use to create Slack-native AI agents. This section shows you how to configure a to connect to your Slack app and give it access to your data and analytics stored in .

Create a project directory


Create a `.env` file with your Slack, AI Assistant, and database configuration

- Download `.env.sample` to a local `.env` file:

- In `.env`, add your Slack tokens and Anthropic API key:

- Add the connection details for the you are using for this :

- Save and close `.env`.
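Before starting the agent, a quick check that `.env` contains every value you meant to set can save a failed start. The sketch below is a deliberately simple parser (it ignores `export` prefixes, inline comments, and multi-line values), and the key names in `REQUIRED` are hypothetical; take the real names from `.env.sample`.

```python
from pathlib import Path


def read_env(path):
    """Parse KEY=VALUE lines from a .env file, skipping blank lines and # comments."""
    values = {}
    for raw in Path(path).read_text(encoding="utf-8").splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, _, value = line.partition("=")
        value = value.strip()
        # Strip one pair of matching surrounding quotes, if present.
        if len(value) >= 2 and value[0] == value[-1] and value[0] in "\"'":
            value = value[1:-1]
        values[key.strip()] = value
    return values


def missing_keys(values, required):
    """Return the required keys that are absent or have an empty value."""
    return [key for key in required if not values.get(key)]


# Hypothetical key names: copy the real ones from your .env.sample.
REQUIRED = ["SLACK_APP_TOKEN", "SLACK_BOT_TOKEN", "ANTHROPIC_API_KEY"]
```

For example, `missing_keys(read_env(".env"), REQUIRED)` returns an empty list when every required value is filled in.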

Add the default prompts to your project


Install to manage and run your AI-powered Slack bots

- Install the using uv.

  `tiger-agent` is installed in `~/.local/bin/tiger-agent`. If necessary, add this folder to your `PATH`.

- Verify the installation.

  You see the help output with the available commands and options.
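If the verification step cannot find `tiger-agent`, the usual cause is that `~/.local/bin` is not on your `PATH`. This small sketch uses the standard library's `shutil.which` to report both facts; the function name and its optional `path` argument (added so it can be tested against a custom search path) are hypothetical.

```python
import os
import shutil
from pathlib import Path


def check_cli_on_path(name="tiger-agent", install_dir="~/.local/bin", path=None):
    """Return (install_dir_on_path, resolved_executable_or_None)."""
    path = os.environ.get("PATH", "") if path is None else path
    install_dir = Path(install_dir).expanduser()
    entries = [Path(p).expanduser() for p in path.split(os.pathsep) if p]
    on_path = install_dir in entries
    # shutil.which searches the given path string for an executable file.
    resolved = shutil.which(name, path=path)
    return on_path, resolved
```

If the first value is `False`, add the directory to `PATH` in your shell profile and open a new terminal.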

Connect your with Slack


Run your :

If you open the explorer in , you can see the tables used by your .

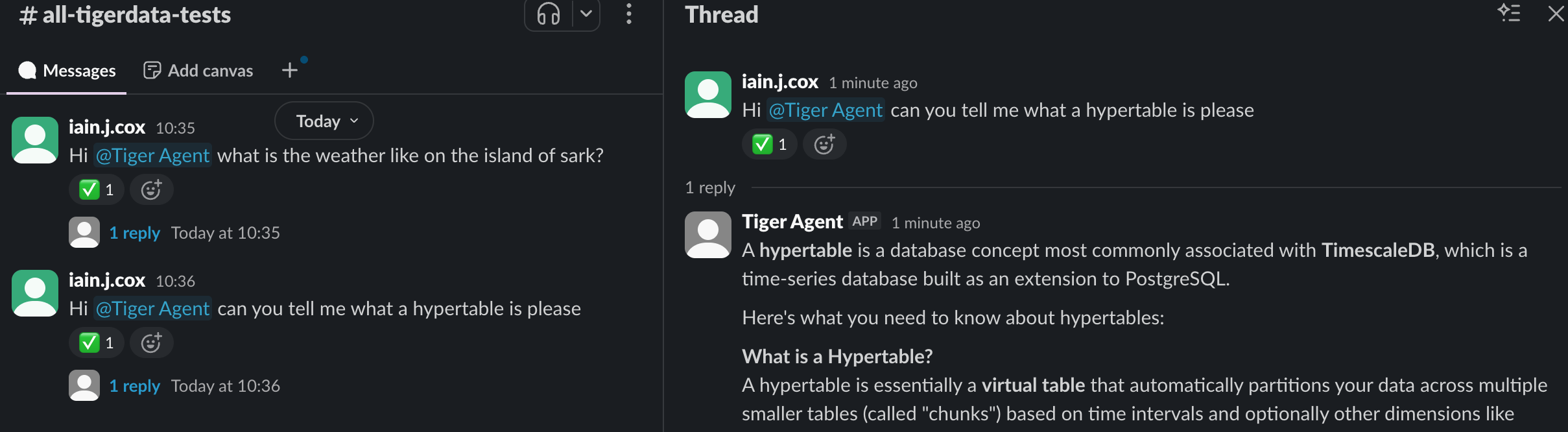

- In Slack, open a public channel app and ask a couple of questions. You see the response in your public channel and log messages in the terminal.


Add information from MCP servers to your

To increase the amount of specialized information your AI Assistant can use, you can add MCP servers that supply the data your users need. For example, to add the MCP server to your :

Copy the example `mcp_config.json` to your project

In `my-tiger-agent`, run the following command:

Configure your to connect to the most useful MCP servers for your organization

For example, to add the documentation MCP server to your , update the `docs` entry to the following:

To avoid errors, delete all entries in `mcp_config.json` with invalid URLs, for example the `github` entry with `http://github-mcp-server/mcp`.

Restart your
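Pruning invalid entries can be scripted. This sketch assumes that each top-level key in `mcp_config.json` is a server name whose value has a `url` field; adjust it if your copy is nested differently. "Invalid" is approximated by a hypothetical heuristic: a non-HTTP(S) scheme, or a single-label host other than `localhost` (such as `github-mcp-server`). It does not test whether a server is reachable.

```python
import json
from pathlib import Path
from urllib.parse import urlparse


def looks_like_placeholder(url):
    """Heuristic: flag non-HTTP(S) URLs and single-label hosts such as github-mcp-server."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    if parsed.scheme not in ("http", "https") or not host:
        return True
    return "." not in host and host != "localhost"


def prune_mcp_config(path):
    """Delete entries whose URL looks like a placeholder; return the removed names."""
    config_path = Path(path)
    config = json.loads(config_path.read_text(encoding="utf-8"))
    removed = [
        name
        for name, entry in config.items()
        if isinstance(entry, dict) and "url" in entry and looks_like_placeholder(entry["url"])
    ]
    for name in removed:
        del config[name]
    config_path.write_text(json.dumps(config, indent=2) + "\n", encoding="utf-8")
    return removed
```

Review the returned names before restarting, since the heuristic can also flag legitimate internal hostnames.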

Customize prompts for personalization

uses Jinja2 templates for dynamic, context-aware prompt generation. This system allows for sophisticated prompts that adapt to conversation context, user preferences, and event metadata. uses the following templates:

- `system_prompt.md`: defines the AI Assistant’s role, capabilities, and behavior patterns. This template sets the foundation for the way your will respond and interact.

- `user_prompt.md`: formats the user’s request with relevant context, providing the AI Assistant with the information necessary to generate an appropriate response.
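The following minimal sketch shows how Jinja2 templates of this kind are rendered from a shared context. The template bodies and the variables `team`, `user`, `channel`, and `text` are hypothetical; the real prompts in your `prompts/` directory and the context the agent supplies may differ. `StrictUndefined` makes a missing variable raise an error instead of silently rendering as empty text, which surfaces typos in customized templates early.

```python
from jinja2 import DictLoader, Environment, StrictUndefined

# Hypothetical template bodies; the real prompts/system_prompt.md and
# prompts/user_prompt.md are longer and may use different variables.
TEMPLATES = {
    "system_prompt.md": (
        "You are an AI Assistant for {{ team }}.\n"
        "{% if user.timezone is defined %}"
        "Express dates in {{ user.timezone }}."
        "{% endif %}"
    ),
    "user_prompt.md": "{{ user.name }} asked in #{{ channel }}: {{ text }}",
}

# StrictUndefined raises UndefinedError on missing variables instead of rendering "".
env = Environment(loader=DictLoader(TEMPLATES), undefined=StrictUndefined)


def render_prompts(context):
    """Render the system and user prompts from one event context dict."""
    system = env.get_template("system_prompt.md").render(context)
    user = env.get_template("user_prompt.md").render(context)
    return system, user
```

The `{% if ... is defined %}` guard is what lets one template adapt to optional context such as a user preference.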


Update the prompt

In `prompts/system_prompt.md`, add another item in the Response Protocol section to fine-tune the behavior of your s. For example:

Test your configuration

Run with your custom prompt:

Advanced configuration options

For additional customization, you can modify the following parameters:

- `--model`: change the AI model (default: `anthropic:claude-sonnet-4-20250514`)

- `--num-workers`: adjust the number of concurrent workers (default: `5`)

- `--max-attempts`: set the number of retry attempts per event (default: `3`)
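When you launch the agent from a script, it can help to pass only the options you actually change. This sketch builds an argument list from the three documented flags and their defaults; the helper name and the lower bound of 1 for the numeric options are assumptions, and the command you append the flags to is not shown here.

```python
# Documented defaults for the three advanced options.
DEFAULT_MODEL = "anthropic:claude-sonnet-4-20250514"
DEFAULT_NUM_WORKERS = 5
DEFAULT_MAX_ATTEMPTS = 3


def build_flags(model=DEFAULT_MODEL, num_workers=DEFAULT_NUM_WORKERS,
                max_attempts=DEFAULT_MAX_ATTEMPTS):
    """Return only the flags whose values differ from the documented defaults."""
    # Assumed sanity bounds; the documentation does not state minimums.
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    flags = []
    if model != DEFAULT_MODEL:
        flags += ["--model", model]
    if num_workers != DEFAULT_NUM_WORKERS:
        flags += ["--num-workers", str(num_workers)]
    if max_attempts != DEFAULT_MAX_ATTEMPTS:
        flags += ["--max-attempts", str(max_attempts)]
    return flags
```

Raising `--num-workers` increases how many events are handled in parallel, while `--max-attempts` caps how often a failing event is retried before it is given up on.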